Pixel Farm has posted a tech preview of its new LIDAR solution. LIDAR is becoming increasingly important in large-scale tracking, but in practice it has also been a major problem. LIDAR scans are vast, often too vast to fit into memory, and working with them is complex. Early on, artists would open a LIDAR scan and have no idea which direction the camera was facing or what scale the scene was at, leading to amusing treasure hunts for some LIDAR ‘element’ or object that made sense, just to understand what the file had captured!

Yet having a lens distortion-free survey of the space is enormously helpful to both camera trackers and animation effects artists.

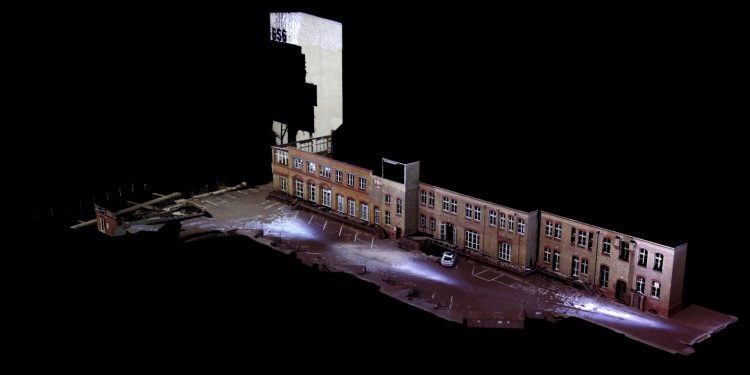

Victor Wolansky has taught classes on LIDAR at fxphd, based on the great work of Florian Gellinger, visual effects supervisor at Rise FX. Rise FX, which did the LIDAR scans we used, works in Europe as a VFX house and has a sister company, Point Cloud 9, devoted to specialist LIDAR services.

We asked Victor in April about the use of LIDAR for an earlier article:

“The use of LIDAR scans is very helpful for solving very weird motions, since as long as you have exact XYZ coordinates for each tracking point you are using, the camera position can be solved anyway, even with little or almost no parallax.

Something else where LIDAR becomes extremely useful is when you have very large sets and a full size CGI set will be built based on that, then if you have multiple shots, each shot and each camera will be perfectly aligned against each other and the CGI model. That survey information can also be used to align regular auto tracked shots.”
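Victor's point, that known XYZ coordinates pin down the camera even without parallax, can be illustrated with a classic single-frame camera resection. The sketch below uses a plain Direct Linear Transform (DLT) with NumPy. This is a textbook technique chosen for illustration, not Pixel Farm's Survey Solver, and the function names `solve_projection_dlt` and `camera_centre` are hypothetical. It assumes at least six surveyed points that are not all coplanar, and it ignores lens distortion and tracking noise.

```python
import numpy as np


def solve_projection_dlt(points_3d, points_2d):
    """Estimate a 3x4 projection matrix from surveyed 3D points and their
    tracked 2D image positions in ONE frame (no parallax required).

    Assumption: at least 6 correspondences, not all coplanar.
    The result is only defined up to an overall scale factor.
    """
    pts3 = np.asarray(points_3d, dtype=float)
    pts2 = np.asarray(points_2d, dtype=float)
    if len(pts3) != len(pts2):
        raise ValueError("need one 2D point per 3D point")
    if len(pts3) < 6:
        raise ValueError("DLT needs at least 6 correspondences")

    zeros = np.zeros(4)
    rows = []
    for X, (u, v) in zip(pts3, pts2):
        Xh = np.append(X, 1.0)  # homogeneous 3D point
        # u = (P1 . Xh) / (P3 . Xh)  ->  P1 . Xh - u * (P3 . Xh) = 0
        rows.append(np.concatenate([Xh, zeros, -u * Xh]))
        # v = (P2 . Xh) / (P3 . Xh)  ->  P2 . Xh - v * (P3 . Xh) = 0
        rows.append(np.concatenate([zeros, Xh, -v * Xh]))

    A = np.vstack(rows)
    # The right singular vector with the smallest singular value is the
    # least-squares solution of A p = 0 subject to |p| = 1.
    _, _, vt = np.linalg.svd(A)
    return vt[-1].reshape(3, 4)


def camera_centre(P):
    """Return the camera position C in survey coordinates.

    C satisfies P @ [C, 1] = 0, so C = -M^-1 p4 with P = [M | p4].
    The unknown scale of P cancels out.
    """
    M, p4 = P[:, :3], P[:, 3]
    return -np.linalg.solve(M, p4)
```

Because the 3D points come from the survey, the recovered camera position is already in the scan's units and orientation. A production solver would typically also normalise the inputs, model lens distortion and refine the result by minimising reprojection error across the shot.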

New Solution

The Pixel Farm ‘tech demo’ so impressed us that we wanted to ask them some questions, not least how they did it, and how much the video had been edited to hide the problems camera tracking departments have previously struggled with. Mike Seymour spoke with Daryl Shail, VFX Product Manager.

fxphd: How do you cope with the vast data sets and the memory problem?

Shail: It’s two-fold. When we import the raw scan we optimize it into our own internal format for processing. This does not affect the integrity of the data in any way. Second, we’ve built in two levels of proxy that affect how the point cloud is displayed in the cinema when static, and interactively being moved in the perspective and orthogonal views.
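Shail does not say how the proxies are built, so the following is only a minimal sketch of one common way to get display proxies from a large point cloud: voxel-grid decimation, which keeps one averaged point per occupied grid cell. The names `voxel_proxy` and `build_proxies` and the two cell sizes are hypothetical. The full-resolution points are left untouched for solving, and only the lighter copies are drawn.

```python
import math
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float, float]


def voxel_proxy(points: Iterable[Point], cell_size: float) -> List[Point]:
    """Return one centroid per occupied cell of a cubic grid.

    Larger cells give fewer points, so the proxy is cheaper to draw.
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")

    # cell index -> [sum_x, sum_y, sum_z, count]
    cells: Dict[Tuple[int, int, int], List[float]] = {}
    for x, y, z in points:
        key = (math.floor(x / cell_size),
               math.floor(y / cell_size),
               math.floor(z / cell_size))
        acc = cells.setdefault(key, [0.0, 0.0, 0.0, 0])
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1

    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in cells.values()]


def build_proxies(points: List[Point],
                  static_cell: float,
                  interactive_cell: float) -> Dict[str, List[Point]]:
    """Build two display levels from the same full-resolution scan:
    a finer one for a static view and a coarser one for use while the
    view is being moved. The cell sizes are illustrative assumptions.
    """
    return {
        "static": voxel_proxy(points, static_cell),
        "interactive": voxel_proxy(points, interactive_cell),
    }
```

A viewer built this way would draw the `"interactive"` level while the user orbits or pans, then switch to the `"static"` level once the view stops moving.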

fxphd: Are there edits in the video – for speed cuts? The solve is amazingly fast.

Shail: The camera solve was accelerated for the sake of the video. In real-time it took roughly 5 seconds to calibrate. The purpose of the video was intended as a technology demonstration as opposed to a tutorial on the actual process, so I opted to keep it moving along…

fxphd: Do you need other orientation or scale guides setup we did not see?

Shail: No. Since this is based on the Survey Solver, there can be only one mathematical solution possible to make the tracked points lock against their surveyed points in the LIDAR, so once solved, the scene is automatically scaled and oriented.

fxphd: Will this be at Siggraph to see?

Shail: Unfortunately we don’t have plans to attend Siggraph this year.

fxphd: When might this be released? Will there be a public or private beta?

Shail: Imminently. We don’t do ‘public’ betas. I’m going to get a cut into Victor’s hands. His input was very helpful in the development of the new tool.