Luma Pictures is one of several VFX studios branching out into VR work. They recently delivered two VR experiences for car-maker Infiniti: one a hybrid live-action/CG driving experience in locations including Italy’s Stelvio Pass and the Atlantic Ocean Road in Norway, and the other a fully CG immersion into the creation of an Infiniti concept design. We asked Luma CG supervisor Dave White how the visual effects for the experiences were made.

fxg: For the experience that contains live action, The Dream Road, can you talk about how your plates were acquired?

White: We had a five-camera rig of REDs, four pointing north, south, east and west and one facing up. The one facing up was in HDRX mode and was capturing a higher dynamic range for an animated sky capture. It didn’t have as wide a dynamic range as traditional HDR, but it gave us enough latitude that we were able to have animated lighting as the car traveled along the road. The other four cameras had 8mm lenses and gave us our panoramic view.
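
In HDRX mode a RED records a second, underexposed track alongside the normal exposure, which is where the extra latitude for the sky comes from. RED’s own tools handle that merge; the sketch below only illustrates the general idea of a two-exposure merge on linear pixel values, assuming the darker track sits a known number of stops below the normal one. The function name, clip threshold and blend width are hypothetical.

```python
def merge_two_exposures(normal, under, stops, clip=0.95, blend=0.1):
    """Combine a normal and an underexposed linear pixel value (hypothetical helper).

    normal: linear value from the normally exposed track, clipping near 1.0
    under:  linear value from the track shot `stops` stops darker
    Returns one linear value that can exceed 1.0 in bright areas such as sky.
    """
    if blend <= 0:
        raise ValueError("blend must be positive")
    # Bring the darker track up to the normal track's exposure.
    recovered = under * (2.0 ** stops)
    # Weight is 0 well below the clip point and ramps to 1 as the normal track clips.
    t = min(max((normal - (clip - blend)) / blend, 0.0), 1.0)
    return normal * (1.0 - t) + recovered * t


# A clipped sky pixel: the normal track reads 1.0, the 3-stop-under track reads 0.5.
print(merge_two_exposures(1.0, 0.5, stops=3))  # 4.0
# A mid-tone pixel: the normal track is trusted as-is.
print(merge_two_exposures(0.2, 0.025, stops=3))  # 0.2
```

Blending over a short ramp rather than switching hard at the clip point avoids a visible seam where the two tracks meet.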

fxg: How did you approach the stitching process?

White: We created a sphere by generating a lat-long stitch from those four cameras using a batch process in PTGui. Then we rendered our car with a spherical lens out of Arnold and comp’d it over the top. Because we were using REDs, the rig wasn’t very nodal at all, so there was a fair amount of parallax discrepancy between the cameras’ nodal points. But we were able to hide some of the areas that weren’t exactly perfect behind the car’s pillars, using them as natural barriers. Our horizon line lined up really well. For stitching, we would set up one frame that worked well, get its points set up and batch it out for the entire sequence.
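
A lat-long (equirectangular) frame stores every viewing direction around a single point, with longitude across the width and latitude down the height, so a full 360° panorama is twice as wide as it is tall. That is broadly the projection a stitcher outputs and a spherical render lens produces. The sketch below shows the mapping in both directions; the axis convention (y up, -z at the frame center) and the function names are assumptions, since tools differ on which direction lands in the middle of the frame.

```python
import math


def direction_to_latlong(x, y, z, width, height):
    """Map a 3D view direction to (u, v) pixel coordinates in a lat-long image.

    Assumed convention: y is up, -z is straight ahead and lands at the image
    center, and longitude increases to the right (towards +x).
    """
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0:
        raise ValueError("direction must be non-zero")
    x, y, z = x / length, y / length, z / length
    lon = math.atan2(x, -z)  # -pi..pi, 0 = straight ahead
    lat = math.asin(y)       # -pi/2..pi/2, +pi/2 = straight up
    u = (lon / (2 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return u, v


def latlong_to_direction(u, v, width, height):
    """Inverse mapping: pixel coordinates back to a unit direction vector."""
    lon = (u / width - 0.5) * 2 * math.pi
    lat = (0.5 - v / height) * math.pi
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = -math.cos(lat) * math.cos(lon)
    return x, y, z


print(direction_to_latlong(0, 0, -1, 4096, 2048))  # (2048.0, 1024.0)
```

Under this convention four cameras at 90° yaw steps each fill roughly a quarter of the frame width, and anything straight up maps to the top row, which is why the upward-facing camera was needed for the sky.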

fxg: How was the CG car interior rendered?

White: We started with CAD data supplied by Infiniti, re-topo’d it to make it nice and clean, and then created all our own textures and displacement maps. A lot of it is about art direction and placing the camera exactly where we wanted it. We wanted it at a proper head height, so that someone would feel naturally seated in a car. But then you have to be careful how far back you sit the head against the headrest, because if you turn around and it’s too close it can be really jarring, so we had to push it forward a little bit.

Then we built out some arms and attached them. During the acquisition we had a GoPro witness cam recording all of the dials and the driver’s arm movements. A team of animators then matchmoved the driver’s motions in sync with the plates, and that gave us really accurate driving.

fxg: There was a simulator version of the experience at the Pebble Beach Automotive Week – how did that come into play?

White: CXC Simulations made a simulator seat that would move and flow with the animation of the car, and they also had the steering wheel rotate in sync with our animation. That way, when viewers put their hands on the steering wheel, it would coordinate with our animation; it was a way to sync people into our VR experience. If the arms in VR were turning the steering wheel while the viewer’s hands were on their lap or in their pockets, it would feel a little like an out-of-body experience, so we wanted to sync those two things.

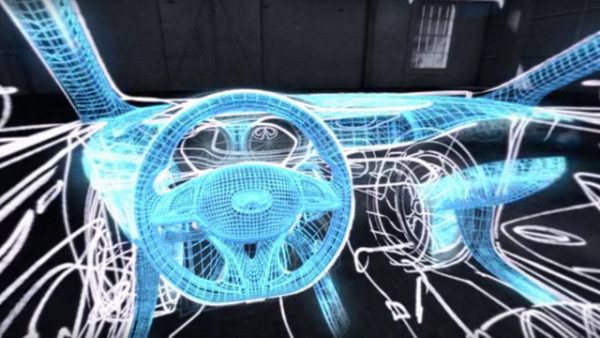

fxg: The other experience, From Pencil to Metal, is fully CG – how was it created?

White: That was interesting because the agency gave us a very creative brief. They came up with a cool idea of showing the whole process of designing a car, and they wanted people to see the evolution from a sketch of a thought all the way through to the finished car. What would it feel like to be inside that process? A sketch would normally be on a flat piece of paper, so what does it feel like to be inside a drawing and see a wireframe come over it as it moves into the digital stage of design? They also built up a clay car and we added that effect in. It had to be true to the real process and what it might look like, but also have a poetic feel, so we were free to break the rules a little bit.

fxg: Again, did you have the CAD files, and what was it like turning them into something that could be rendered?

White: Arnold handles huge amounts of geometry amazingly well. In some ways our rendering was not that different from anything else we do. We don’t hold back on resolution, whether that’s geo resolution or texture; we’re finding more and more that the tools can handle it. It did become tricky once we were rendering at 4K and 60 fps. Especially when you’re inside the car with a spherical lens, the car wraps every inch of the frame and it becomes a giant render, but we were still able to keep render times pretty reasonable.

One of the big sources of complexity was that the car itself is made of hundreds of pieces of hi-res geo, and getting all of those pieces to transition in three-dimensional VR space was pretty tricky. Some of our effects were driven by mattes. For the clay effect, for example, we would take the car geo and bring it into NUKE, and even that step was challenging at the car’s resolution. We used UDIM UV spaces, about 70 UDIM tiles, so we had to create mattes out of NUKE that would render across all of those tiles: we projected our rotos in 3D space onto the geometry in NUKE and then output animated maps through all 70 UDIMs.
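
UDIM tiles follow a widely used numbering convention: tile 1001 covers UV 0 to 1, the number goes up by one per tile along U for ten tiles, then jumps by ten for each row up in V. The sketch below maps a UV coordinate to its tile and expands per-tile file paths for one frame of an animated matte, the kind of bookkeeping involved in writing mattes across roughly 70 tiles. The row-by-row packing and the `clay_matte.####.<UDIM>.exr` filename pattern are hypothetical; the interview doesn’t describe Luma’s layout or naming.

```python
import math

TILES_PER_ROW = 10  # UDIM convention: ten tiles along U before moving up a row in V


def udim_tile(u, v):
    """Return the UDIM number of the tile containing UV coordinate (u, v)."""
    col, row = math.floor(u), math.floor(v)
    if not 0 <= col < TILES_PER_ROW or row < 0:
        raise ValueError(f"UV ({u}, {v}) falls outside the UDIM grid")
    return 1001 + col + TILES_PER_ROW * row


def tile_local_uv(u, v):
    """UV coordinate relative to the corner of its own tile, each in [0, 1)."""
    return u - math.floor(u), v - math.floor(v)


def matte_paths(pattern, tile_count, frame):
    """Per-tile file paths for one frame of an animated matte.

    Assumes (hypothetically) that tiles are packed row by row from 1001 with no
    gaps, which makes the tile numbers contiguous, and that `pattern` contains
    '####' for the frame number and '<UDIM>' for the tile number.
    """
    tiles = range(1001, 1001 + tile_count)
    frame_str = f"{frame:04d}"
    return [pattern.replace("####", frame_str).replace("<UDIM>", str(t)) for t in tiles]


print(udim_tile(3.25, 6.75))      # 1064
print(tile_local_uv(3.25, 6.75))  # (0.25, 0.75)
print(len(matte_paths("clay_matte.####.<UDIM>.exr", 70, 12)))  # 70
```

Every frame of an animated matte multiplies into one image per tile, so a 70-tile layout means 70 files per frame, which helps explain why the step became so labor-intensive.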

Outputting mattes across all those tiles became very labor-intensive, and we had to write a lot of code to optimize the process and get the results back into Maya as mattes that could drive the alphas of the clay crawling across the surface. We would often use all three channels of our NUKE mattes. The red channel carried the alpha. The leading edge of the matte went into green, which we’d feather out and add a little noise to, and that drove a displacement that bulged up the leading edge of the clay. In other cases we might use a blue channel to drive particle emissions. Getting the car from Maya into NUKE, where we could really creatively design the crawling pattern of those mattes, and then getting all those mattes back into Maya, into shaders, and rendered out through an Oculus lens was one of the biggest overall challenges.
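
White describes packing three signals into one RGB matte: red as the clay alpha, green as a feathered, noisy band along the leading edge that drives displacement, and blue for particle emission. The sketch below evaluates those three channels for a single texel, assuming each texel has a hypothetical crawl coordinate `s` (0 to 1, how far along the crawl it sits, standing in for the projected rotos) and that the front’s position is an animated `progress` value. The feather widths, the hash-based noise and the shape of the blue band are illustrative choices, not Luma’s setup.

```python
import math


def smoothstep(edge0, edge1, x):
    """Hermite smoothstep: 0 below edge0, 1 above edge1, smooth in between."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def hash_noise(s, seed=0):
    """Cheap deterministic pseudo-random value in [0, 1), a common shader-style hash."""
    n = math.sin(s * 12.9898 + seed * 78.233) * 43758.5453
    return n - math.floor(n)


def clay_matte(s, progress, feather=0.05, noise_amount=0.3, emit_width=0.01, seed=0):
    """RGB matte values for a texel at crawl coordinate s with the clay front at progress.

    r: clay alpha, 1 where the clay has already crawled past, 0 ahead of the front.
    g: feathered, noisy band at the leading edge, intended to drive displacement.
    b: narrower band right at the front, intended to drive particle emission.
    """
    if feather <= 0 or emit_width <= 0:
        raise ValueError("feather and emit_width must be positive")
    # Red: soft step from covered (behind the front) to uncovered (ahead of it).
    r = 1.0 - smoothstep(progress - feather, progress + feather, s)
    # Green: peaks at the front and falls to zero one feather-width away.
    distance = abs(s - progress)
    edge = 1.0 - smoothstep(0.0, feather, distance)
    # Scale the band by noise so the bulge isn't perfectly uniform.
    g = edge * (1.0 - noise_amount + noise_amount * hash_noise(s, seed))
    # Blue: same idea as green but tighter and without noise.
    b = 1.0 - smoothstep(0.0, emit_width, distance)
    return r, g, b


print(clay_matte(0.1, 0.5))  # (1.0, 0.0, 0.0): well behind the front, fully clay
print(clay_matte(0.5, 0.5))  # on the front: r near 0.5, g between 0.7 and 1.0, b 1.0
```

Keeping the three signals in one image means a single set of per-tile files can feed the alpha, the displacement and the emitter in the shader.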


fxg: How did you review the work?

White: For lighting and composition, we tried to render out stills as much as possible and get them into the Oculus to review lighting. We’d do a brief lighting review on a flat screen, but then we’d always get the stills into the Oculus as fast as we could.

For animation, particularly in NUKE, we created an array of cameras wrapped radially around the viewpoint, and we would render those out really fast, usually at 30 fps, just to keep things streamlined. That let us see pretty much every angle. It wasn’t exactly a spherical image; it was a stitched-together panorama of our interior view. That allowed us to review matte timing, and then we would get it into the renders quickly after that.
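
A radial camera array like this needs two numbers per camera: where it points and how wide it sees. The sketch below spreads a hypothetical number of cameras evenly around 360 degrees, widens each field of view by an overlap margin so neighboring views can be blended at the seams, and converts that field of view to a focal length using the standard pinhole relation for an assumed horizontal filmback. The camera count, overlap and 36mm aperture are illustrative; the interview doesn’t give Luma’s values.

```python
import math


def radial_camera_array(count, overlap_deg=10.0, aperture_mm=36.0):
    """Yaw angles, horizontal FOV and focal length for `count` cameras around 360 degrees.

    Each camera covers its own slice of the circle plus `overlap_deg` shared with
    its neighbors. aperture_mm is a hypothetical horizontal filmback width.
    """
    if count < 2:
        raise ValueError("need at least two cameras for a panorama")
    slice_deg = 360.0 / count
    fov_deg = slice_deg + overlap_deg
    if fov_deg >= 180.0:
        raise ValueError("a rectilinear camera cannot cover 180 degrees or more")
    # Pinhole relation: aperture = 2 * focal * tan(fov / 2).
    focal_mm = aperture_mm / (2.0 * math.tan(math.radians(fov_deg) / 2.0))
    yaws = [i * slice_deg for i in range(count)]
    return yaws, fov_deg, focal_mm


yaws, fov, focal = radial_camera_array(8)
print(yaws)  # [0.0, 45.0, 90.0, 135.0, 180.0, 225.0, 270.0, 315.0]
print(fov)   # 55.0
```

More cameras give narrower, less distorted views that are cheaper to stitch cleanly, at the cost of more renders per frame; dropping the preview frame rate to 30 fps is another way to keep that cost down.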

For CG animation, that’s a challenge we’re still facing. We proposed building a camera rig inside Maya that could playblast up to three angles, with an auto-stitch that would combine them really fast. But once again, because the turnaround was so quick, we mostly ran fast occlusion renders as our playblasts and reviewed those in the Oculus. It was mostly for timing review.

fxg: VR is still relatively new – how did you ramp up in terms of artists and training?

White: The one thing that really works to our advantage in getting people up to speed is that artists generally think it’s really cool. We have people here who have made 10 consecutive blockbuster films, but VR flips things upside down. People were getting giddy about it, like it was a reboot for them. To see an artist who may have worked on the CG car put on a headset, a giant grin come across their face, and see them get all excited is very cool.

Sometimes the funniest thing during production is watching people with the headsets on. The number of accidents that happen! With the steering wheel in front of you, you’re definitely motivated to grab it, but you only have a desk in front of you. More people whacked their hands on the desk than we thought would!

You can download the Infiniti Driver’s Seat VR app on iTunes or Google Play and find out more about Luma Pictures at their website.

Note: Robert Moggach also worked as a freelance supervisor to assist on the project, and some of the pictures above are his. He loved working on the project, and he noted in an email that Big Look 360 also did a great job contributing to this shoot. Big Look designed and built the 360 rig using their own RED cameras, with in-house fabrication for the custom milled rig. The rig was built in Dallas, Texas, and traveled with the production. Big Look 360 generally do event production “for NFL etc. but on occasion their team get involved in commercial projects”, Moggach explained.